Texturing component - extensions

Extensions introduced in Castle Game Engine related to texturing.

See also documentation of supported nodes of the Texturing component and X3D specification of the Texturing component.

Contents:

- Advanced shading with textures (CommonSurfaceShader)

- Bump mapping (normalMap, heightMap, heightMapScale fields of Appearance)

- Texture automatically rendered from a viewpoint (RenderedTexture node)

- Generate texture coordinates on primitives (Box/Cone/Cylinder/Sphere/Extrusion/Text.texCoord)

- Generating 3D tex coords in world space (easy mirrors by additional TextureCoordinateGenerator.mode values)

- Tex coord generation dependent on bounding box (TextureCoordinateGenerator.mode = BOUNDS*)

- Override alpha channel detection (field alphaChannel for ImageTexture, MovieTexture and other textures)

- Movies for MovieTexture can be loaded from images sequence

- Texture for GUI (TextureProperties.guiTexture)

- Flip the texture vertically at loading (ImageTexture.flipVertically, MovieTexture.flipVertically)

- Multi-texture with only generated children (MultiGeneratedTextureCoordinate)

1. Advanced shading with textures (CommonSurfaceShader)


CommonSurfaceShader extension is deprecated in our engine now. Instead, use Material node from X3D 4.0, that has texture parameters, normal maps, occlusion maps and more.

The CommonSurfaceShader node can be used inside the Appearance.shaders field, to request an advanced shading for the given shape. The rendering follows the standard Blinn–Phong shading model, with the additional feature that all parameters can be adjusted using the textures.

This allows you to vary the shading parameters across a surface. For example, you can use specular maps to vary the brightness of the light reflections. You can use normal maps to vary the normal vectors throughout the surface (simulating tiny features of a surface, aka bump mapping).

The node can be considered a "material on steroids", replacing the material and texture information provided in the standard Appearance.texture and Appearance.material fields. It is not a normal "shader" node, as it does not allow you to write an explicit shader code (for this, see programmable shaders nodes or our compositing shaders extension). But it is placed on the Appearance.shaders list, as an alternative to other shader nodes, and it does determine shading.

The tests of this feature are inside demo models bump_mapping/common_surface_shader. It has some manually crafted files, and also X3D exported from Blender using our Blender -> X3D exporter that can handle CommonSurfaceShader (no longer maintained, use glTF now).

Most of the shading parameters are specified using five fields:

- xxxFactor (usually of SFFloat or SFVec3f type, which means: a single float value or a 3D vector): Determines the shading parameter xxx.

- xxxTexture (SFNode type, usually you can place any X3DTextureNode here): The texture to vary the shading parameter xxx throughout the surface. If specified, this is multiplied with the xxxFactor.

- xxxTextureCoordinatesId (SFInt32, by default 0): Which texture coordinates determine the xxxTexture placement. Ignored if xxxTexture is not assigned.

- xxxTextureChannelMask (SFString, various defaults): A mask that says which texture channels determine the shading parameter. Ignored if xxxTexture is not assigned.

- xxxTextureId (SFInt32, by default -1): Ignored by our implementation. (We're also not sure what it does, as it is not explained in the paper.)
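The interplay of xxxFactor, xxxTexture and xxxTextureChannelMask described above can be sketched in a few lines of Python. This is only an illustration of "pick channels by mask, then multiply by the factor"; the function name `shading_parameter` and the representation of a texture sample as an RGBA tuple are assumptions made for this example, not engine API.

```python
from typing import Optional, Sequence, Tuple

# Position of each channel letter inside an (r, g, b, a) texture sample.
CHANNEL_INDEX = {"r": 0, "g": 1, "b": 2, "a": 3}


def shading_parameter(
    factor: Sequence[float],
    texel_rgba: Optional[Sequence[float]],
    channel_mask: str,
) -> Tuple[float, ...]:
    """Combine xxxFactor with an optional xxxTexture sample (hypothetical helper).

    Without a texture the factor is used as-is. With a texture, the channels
    named by the mask are picked and multiplied component-wise by the factor.
    """
    if texel_rgba is None:
        return tuple(factor)
    picked = [texel_rgba[CHANNEL_INDEX[c]] for c in channel_mask]
    if len(picked) != len(factor):
        raise ValueError("channel mask must select one channel per factor component")
    return tuple(f * t for f, t in zip(factor, picked))


texel = (1.0, 0.5, 0.0, 0.25)  # one RGBA sample, values in 0..1
diffuse = shading_parameter((0.5, 0.5, 0.5), texel, "rgb")
alpha = shading_parameter((1.0,), texel, "a")
```

Here `diffuse` takes its three components from the "rgb" channels and `alpha` takes its single component from the "a" channel of the same sample, which mirrors packing several attributes into one texture.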

More information:

- Instant Reality tutorial, nicely presenting the most important features, and linking to other useful resources.

- The CommonSurfaceShader node was designed by Instant Reality. See the Instant Reality specification of CommonSurfaceShader.

- The node is also implemented in X3DOM. See X3DOM specification of the CommonSurfaceShader (they added some fields). Watch out: some of the default values they put in the "Fields" section are wrong. The default values in that lengthy line <CommonSurfaceShader... at the top of the page are OK.

- It's a really neat design, and Michalis would like to see it available as part of the X3D 4.0 standard :) Since Castle Game Engine 6.2.0, it is the advised way to use normal maps, deprecating our previous extensions for bump mapping.

The list of all the fields is below. We do not yet support everything — only the fields marked bold.

CommonSurfaceShader : X3DShaderNode {

SFFloat [in,out] alphaFactor 1

SFInt32 [in,out] alphaTextureId -1

SFInt32 [in,out] alphaTextureCoordinatesId 0

SFString [in,out] alphaTextureChannelMask "a"

SFNode [in,out] alphaTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] ambientFactor 0.2 0.2 0.2

SFInt32 [in,out] ambientTextureId -1

SFInt32 [in,out] ambientTextureCoordinatesId 0

SFString [in,out] ambientTextureChannelMask "rgb"

SFNode [in,out] ambientTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] diffuseFactor 0.8 0.8 0.8

SFInt32 [in,out] diffuseTextureId -1

SFInt32 [in,out] diffuseTextureCoordinatesId 0

SFString [in,out] diffuseTextureChannelMask "rgb"

SFNode [in,out] diffuseTexture NULL # Allowed: X3DTextureNode

# Added in X3DOM

SFNode [in,out] diffuseDisplacementTexture NULL # Allowed: X3DTextureNode

# Added in X3DOM

SFString [in,out] displacementAxis "y"

SFFloat [in,out] displacementFactor 255.0

SFInt32 [in,out] displacementTextureId -1

SFInt32 [in,out] displacementTextureCoordinatesId 0

SFNode [in,out] displacementTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] emissiveFactor 0 0 0

SFInt32 [in,out] emissiveTextureId -1

SFInt32 [in,out] emissiveTextureCoordinatesId 0

SFString [in,out] emissiveTextureChannelMask "rgb"

SFNode [in,out] emissiveTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] environmentFactor 1 1 1

SFInt32 [in,out] environmentTextureId -1

SFInt32 [in,out] environmentTextureCoordinatesId 0

SFString [in,out] environmentTextureChannelMask "rgb"

SFNode [in,out] environmentTexture NULL # Allowed: X3DEnvironmentTextureNode

# Added in X3DOM

SFNode [in,out] multiDiffuseAlphaTexture NULL # Allowed: X3DTextureNode

SFNode [in,out] multiEmmisiveAmbientIntensityTexture NULL # Allowed: X3DTextureNode

SFNode [in,out] multiSpecularShininessTexture NULL # Allowed: X3DTextureNode

SFNode [in,out] multiVisibilityTexture NULL # Allowed: X3DTextureNode

SFString [in,out] normalFormat "UNORM" # The default is the only allowed value for now

SFString [in,out] normalSpace "TANGENT" # The default is the only allowed value for now

SFInt32 [in,out] normalTextureId -1

SFInt32 [in,out] normalTextureCoordinatesId 0

SFString [in,out] normalTextureChannelMask "rgb"

SFVec3f [] normalScale 2 2 2

SFVec3f [] normalBias -1 -1 -1

SFNode [in,out] normalTexture NULL # Allowed: X3DTextureNode

# Added in Castle Game Engine

SFFloat [in,out] normalTextureParallaxHeight 0

SFVec3f [in,out] reflectionFactor 0 0 0 # Used only by (classic) ray-tracer for now

SFInt32 [in,out] reflectionTextureId -1

SFInt32 [in,out] reflectionTextureCoordinatesId 0

SFString [in,out] reflectionTextureChannelMask "rgb"

SFNode [in,out] reflectionTexture NULL # Allowed: X3DTextureNode

SFFloat [in,out] shininessFactor 0.2

SFInt32 [in,out] shininessTextureId -1

SFInt32 [in,out] shininessTextureCoordinatesId 0

SFString [in,out] shininessTextureChannelMask "a"

SFNode [in,out] shininessTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] specularFactor 0 0 0

SFInt32 [in,out] specularTextureId -1

SFInt32 [in,out] specularTextureCoordinatesId 0

SFString [in,out] specularTextureChannelMask "rgb"

SFNode [in,out] specularTexture NULL # Allowed: X3DTextureNode

SFVec3f [in,out] transmissionFactor 0 0 0 # Used only by (path) ray-tracer for now

SFInt32 [in,out] transmissionTextureId -1

SFInt32 [in,out] transmissionTextureCoordinatesId 0

SFString [in,out] transmissionTextureChannelMask "rgb"

SFNode [in,out] transmissionTexture NULL # Allowed: X3DTextureNode

# Additional fields (not in alphabetical order)

# Affects how normal maps work

SFInt32 [in,out] tangentTextureCoordinatesId -1

SFInt32 [in,out] binormalTextureCoordinatesId -1

# Affects how alphaTexture contents are treated

SFBool [in,out] invertAlphaTexture FALSE

SFFloat [in,out] relativeIndexOfRefraction 1

SFFloat [in,out] fresnelBlend 0

MFBool [] textureTransformEnabled [FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE]

}

The node also contains everything inherited from the standard X3DShaderNode, like isSelected and isValid output events.

Notes to the specific fields above:

You can pack some attributes in a single texture, and use it in multiple X3D fields by the DEF/USE mechanism.

Make sure to use xxxTextureChannelMask fields to pick appropriate information from appropriate channels. The defaults are often sensible, e.g. diffuseTexture is from "rgb" while alphaTexture is from "a", so you can trivially create an RGBA texture and put it in both fields. Usually you will want to use different channels for each information.

TODO: The current implementation always uses diffuseTexture as combined diffuseTexture (rgb) + alphaTexture (a). To keep forward compatibility, if you have an alpha channel in diffuseTexture, always place the same texture as alphaTexture. This will make your models work exactly the same once we implement proper alphaTexture handling.

In X3D classic encoding:

CommonSurfaceShader {
  diffuseTexture DEF MyTexture ImageTexture { ... }
  alphaTexture USE MyTexture
}

In X3D XML encoding:

<CommonSurfaceShader>
  <ImageTexture containerField="diffuseTexture" DEF="MyTexture" ...>...</ImageTexture>
  <ImageTexture containerField="alphaTexture" USE="MyTexture" />
</CommonSurfaceShader>

Note that the X3DOM implementation of CommonSurfaceShader seems to have the same bug: the diffuseTexture is used for both diffuse + alpha, and alphaTexture is ignored. So, the buggy behaviors happen to be compatible... but please don't depend on it. Sooner or later, one or both implementations will be fixed, and then the alphaTexture will be respected correctly.

Alternatively, put your texture inside multiDiffuseAlphaTexture. Note that X3DOM seems to not support multiDiffuseAlphaTexture (although it's in their specification).

Our engine adds a new field, normalTextureParallaxHeight, as an extension. Setting this field to non-zero means that:

- The alpha channel of the normalTexture should be interpreted as a height map. This is the same thing as a "displacement map", but is used for a different purpose. If the normalTexture doesn't have an alpha channel, the normalTextureParallaxHeight is ignored.

- It is used to simulate that the surface has some depth, using the parallax bump mapping effect.

You can control the exact effect type in view3dscene using the View -> Bump Mapping -> ... Parallax menu options. You can try it on the common_surface_shader/steep_parallax.x3dv example. Play around with different normalTextureParallaxHeight values and observe how they affect what you see.

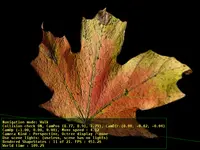

2. Bump mapping (normalMap, heightMap, heightMapScale fields of Appearance)

The approach described below to specify normal maps is DEPRECATED. Instead follow bump mapping documentation. Provide normalmap using glTF or proper field in X3D 4.0 material.

We add to the Appearance node new fields useful for bump mapping:

Appearance : X3DAppearanceNode {

... all previous Appearance fields ...

SFNode [in,out] normalMap NULL # only 2D texture nodes (ImageTexture, MovieTexture, PixelTexture) allowed

SFNode [in,out] heightMap NULL # deprecated; only 2D texture nodes (ImageTexture, MovieTexture, PixelTexture) allowed

SFFloat [in,out] heightMapScale 0.01 # must be > 0

}


RGB channels of the texture specified as normalMap describe normal vector values of the surface. Normal vectors are encoded as colors: vector (x, y, z) should be encoded as RGB((x+1)/2, (y+1)/2, (z+1)/2).
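As a small worked example of this encoding, the Python sketch below converts a normal vector to a color and back. The function names are made up for illustration; the decoding step (multiply by 2, subtract 1) is simply the inverse of the formula above.

```python
def encode_normal(x, y, z):
    """Map a unit normal with components in -1..1 to RGB components in 0..1."""
    return ((x + 1) / 2, (y + 1) / 2, (z + 1) / 2)


def decode_normal(r, g, b):
    """Inverse mapping: RGB components in 0..1 back to a vector in -1..1."""
    return (r * 2 - 1, g * 2 - 1, b * 2 - 1)


def to_8bit(rgb):
    """Quantize 0..1 color components to 0..255, as stored in a typical image."""
    return tuple(round(c * 255) for c in rgb)


# A normal pointing straight out of the surface (tangent space +Z).
flat = encode_normal(0.0, 0.0, 1.0)
```

The "flat" normal (0, 0, 1) becomes the familiar light blue color that dominates most normal map images.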

You can use e.g. the GIMP normalmap plugin to generate such normal maps from your textures. Hint: Remember to check "invert y" when generating normal maps. In image editing programs the image Y grows down, but we want Y (as interpreted by normals) to grow up, just like the texture T coordinate.

Such normal map is enough to use the classic bump mapping method, and already enhances the visual look of your scene. For most effective results, you can place some dynamic light source in the scene — the bump mapping effect is then obvious.

You can additionally specify a height map. Since version 3.10.0 of view3dscene (2.5.0 of engine), this height map is specified within the alpha channel of the normalMap texture. This leads to an easy and efficient implementation, and it is also easy for texture creators: in the GIMP normal map plugin just set "Alpha Channel" to "Height". A height map allows using a more sophisticated parallax bump mapping algorithm. We actually have a full steep parallax mapping implementation with self-shadowing. This can make the effect truly amazing, but also slower.

If the height map (that is, the alpha channel of normalMap) exists, then we also look at the heightMapScale field. This allows you to tweak the perceived height of bumps for parallax mapping.

Since version 3.10.0 of view3dscene (2.5.0 of engine), new shader pipeline allows the bump mapping to cooperate with all normal VRML/X3D lighting and multi-texturing settings. So the same lights and textures are used for bump mapping lighting equations, only they have more interesting normals.

Note that bump mapping only works if you also assigned a normal (2D) texture to your shape. We assume that the normal map and the height map are mapped on your surface in the same way (same texture coordinates, same texture transform) as the first texture (in case of multi-texturing).

Examples:

- Open with view3dscene sample models from our VRML/X3D demo models (see subdirectory bump_mapping/).

- You can see this used in The Castle "The Fountain" level. Authors of new levels are encouraged to use bump mapping!

Note: you can also use these fields within the KambiAppearance node instead of Appearance. This allows you to declare KambiAppearance by EXTERNPROTO, that falls back on the standard Appearance, and thus bump mapping extensions will be gracefully omitted by other browsers. See VRML/X3D demo models for examples.

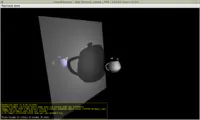

3. Texture automatically rendered from a viewpoint (RenderedTexture node)


Texture rendered from a specified viewpoint in the 3D scene. This can be used for a wide range of graphic effects. The most straightforward use is to make something like a "security camera" or a "portal", through which a player can peek at what happens in another place in the 3D world.

RenderedTexture : X3DTextureNode {

SFNode [in,out] metadata NULL # [X3DMetadataObject]

MFInt32 [in,out] dimensions 128 128 4 1 1

SFString [in,out] update "NONE" # ["NONE"|"NEXT_FRAME_ONLY"|"ALWAYS"]

SFNode [in,out] viewpoint NULL # [X3DViewpointNode] (VRML 1.0 camera nodes also allowed)

SFNode [] textureProperties NULL # [TextureProperties]

SFBool [] repeatS TRUE

SFBool [] repeatT TRUE

SFBool [] repeatR TRUE

MFBool [in,out] depthMap []

SFMatrix4f [out] viewing

SFMatrix4f [out] projection

SFBool [out] rendering

}

The first two numbers in the "dimensions" field specify the width and the height of the texture. (Our current implementation ignores the rest of the dimensions field.)

"update" is the standard field for automatically generated textures (works the same as for GeneratedCubeMapTexture or GeneratedShadowMap). It says when to actually generate the texture: "NONE" means never, "ALWAYS" means every frame (for fully dynamic scenes), "NEXT_FRAME_ONLY" says to update at the next frame (and afterwards change back to "NONE").

"viewpoint" allows you to explicitly specify the viewpoint node from which to render to the texture. The default NULL value means to render from the current camera (this is equivalent to specifying the viewpoint node that is currently bound). Yes, you can easily see a recursive texture using this, just look at the textured object. It's quite fun :) (It's not a problem for rendering speed, as we always render the texture only once in a frame.) You can of course specify another viewpoint node, to render from there.

"textureProperties" is the standard field of all texture nodes. You can place there a TextureProperties node to specify magnification and minification filters (note that mipmaps, if required, will always be correctly automatically updated for RenderedTexture), anisotropy and such.

"repeatS", "repeatT", "repeatR" are also standard for texture nodes. They specify whether the texture repeats or clamps. For RenderedTexture, you may often want to set them to FALSE. "repeatR" is for 3D textures, useless for now.

"depthMap": if it is TRUE, then the generated texture will contain the depth buffer of the image (instead of the color buffer as usual). (Our current implementation only looks at the first item of the MFBool field depthMap.)

The "rendering" output event sends a TRUE value right before rendering to the texture, and sends FALSE after. It can be useful to e.g. ROUTE this to a ClipPlane.enabled field. This is our (Kambi engine) extension, not present in other implementations. In the future, the "scene" field will be implemented, which will allow more flexibility, but for now the simple "rendering" event may be useful.

The "viewing" and "projection" output events are also sent right before rendering. They contain the modelview (camera) and projection matrices.

TODO: "scene" should also be supported. "background" and "fog" also. And the default background / fog behavior should change? To match Xj3D, by default no background / fog means that we don't use them. Currently we just use the current background / fog.

This is mostly compatible with the InstantReality RenderedTexture and with Xj3D. We do not support all InstantReality fields, but the basic fields and usage remain the same.
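The three values of the "update" field described above behave like a tiny state machine, evaluated once per frame. The Python sketch below models only that documented behavior; the function `step_update` is a hypothetical name for this illustration and does not correspond to any engine routine.

```python
from typing import Tuple


def step_update(update: str) -> Tuple[bool, str]:
    """Return (render_this_frame, value_of_update_afterwards) for one frame."""
    if update == "ALWAYS":
        return True, "ALWAYS"
    if update == "NEXT_FRAME_ONLY":
        # Render once, then fall back to "NONE" as described in the text.
        return True, "NONE"
    if update == "NONE":
        return False, "NONE"
    raise ValueError("unknown update value: " + update)


# Simulate three frames after a script sets update to "NEXT_FRAME_ONLY".
state = "NEXT_FRAME_ONLY"
rendered = []
for _ in range(3):
    did_render, state = step_update(state)
    rendered.append(did_render)
```

In this simulation the texture is regenerated in the first frame only, which is the typical way to refresh a mostly static RenderedTexture on demand.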

4. Generate texture coordinates on primitives (Box/Cone/Cylinder/Sphere/Extrusion/Text.texCoord)

We add a texCoord field to various VRML/X3D primitives. You can use it to generate texture coordinates on a primitive, by the TextureCoordinateGenerator node (for example to make mirrors), or (for shadow maps) ProjectedTextureCoordinate.

You can even use multi-texturing on primitives, by the MultiGeneratedTextureCoordinate node. This works exactly like the standard MultiTextureCoordinate, except only coordinate-generating children are allowed.

Note that you cannot use explicit TextureCoordinate nodes for primitives, because you don't know the geometry of the primitive. For a similar reason you cannot use MultiTextureCoordinate (as it would allow TextureCoordinate as children).

Box / Cone / Cylinder / Sphere / Extrusion / Text {

...

SFNode [in,out] texCoord NULL # [TextureCoordinateGenerator, ProjectedTextureCoordinate, MultiGeneratedTextureCoordinate]

}

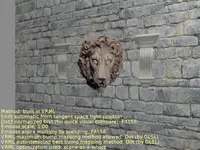

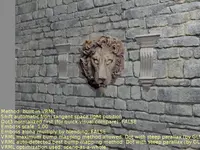

5. Generating 3D tex coords in world space (easy mirrors by additional TextureCoordinateGenerator.mode values)


TextureCoordinateGenerator.mode allows two additional generation modes:

- WORLDSPACEREFLECTIONVECTOR: Generates reflection coordinates mapping to a 3D direction in world space. This makes the cube map reflection simulate a real mirror. It's analogous to the standard "CAMERASPACEREFLECTIONVECTOR", which does the same but in camera space, making the mirror reflect mostly the "back" side of the cube, regardless of how the scene is rotated.

- WORLDSPACENORMAL: Use the vertex normal, transformed to world space, as texture coordinates. Analogous to the standard "CAMERASPACENORMAL", which does the same but in camera space.

These modes are extremely useful for making mirrors. See Cube map environmental texturing component and our VRML/X3D demo models for examples.
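To make the idea of a world-space reflection vector concrete, here is a minimal Python sketch using the standard mirror reflection formula r = i - 2 (n . i) n, with all vectors expressed in world space. It is an assumption of this example that the generated coordinates follow this textbook formula; the function names are illustrative only.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def world_space_reflection(camera_pos, vertex_pos, normal):
    """Reflect the camera-to-vertex direction around the world-space normal."""
    incident = normalize(tuple(v - c for v, c in zip(vertex_pos, camera_pos)))
    n = normalize(normal)
    d = sum(i * k for i, k in zip(incident, n))
    return tuple(i - 2 * d * k for i, k in zip(incident, n))


# Camera on the +Z axis looks at a mirror at the origin facing +Z:
# the view direction bounces straight back towards the camera.
r = world_space_reflection((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

Because the result is a direction in world space, it can index a cube map of the environment directly, and the reflection stays fixed to the world when the camera rotates.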

6. Tex coord generation dependent on bounding box (TextureCoordinateGenerator.mode = BOUNDS*)

Three more values for TextureCoordinateGenerator.mode:

- BOUNDS: Automatically generate nice texture coordinates, suitable for 2D or 3D textures. This is equivalent to either BOUNDS2D or BOUNDS3D, depending on what type of texture is actually used during rendering.

- BOUNDS2D: Automatically generate nice 2D texture coordinates, based on the local bounding box of given shape. This texture mapping is precisely defined by the VRML/X3D standard at the IndexedFaceSet description.

- BOUNDS3D: Automatically generate nice 3D texture coordinates, based on the local bounding box of given shape. This texture mapping is precisely defined by the VRML/X3D standard at Texturing3D component, section "Texture coordinate generation for primitive objects".

Following VRML/X3D standards, the above texture mappings are automatically used when you supply a texture but no texture coordinates for your shape. Our extensions make it possible to also explicitly use these mappings, when you really want to explicitly use a TextureCoordinateGenerator node. This is useful when working with multi-texturing (e.g. one texture unit may have BOUNDS mapping, while the other texture unit has a different mapping).
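As an illustration of the 2D bounding-box mapping, the Python sketch below follows the default IndexedFaceSet mapping as we read it in the X3D standard: S runs from 0 to 1 along the longest bounding box dimension, and T runs along the second longest dimension from 0 to the ratio of the two sizes, with ties resolved in X, Y, Z order. Treat it as a sketch of that rule, not as the engine's code; `bounds2d_texcoord` is a hypothetical name.

```python
def bounds2d_texcoord(point, box_min, box_max):
    """Compute (s, t) for a point from the local bounding box of its shape."""
    sizes = [box_max[i] - box_min[i] for i in range(3)]
    # Sort axes by size, largest first. Python's sort is stable, so equal
    # sizes keep the X, Y, Z order of preference.
    axes = sorted(range(3), key=lambda i: -sizes[i])
    s_axis, t_axis = axes[0], axes[1]
    longest = sizes[s_axis]
    if longest == 0:
        return (0.0, 0.0)  # degenerate box: a single point
    s = (point[s_axis] - box_min[s_axis]) / longest
    t = (point[t_axis] - box_min[t_axis]) / longest
    return (s, t)


# A 4 x 2 x 1 box: S follows X (0..1), T follows Y (0..0.5).
corner = bounds2d_texcoord((4.0, 2.0, 0.0), (0.0, 0.0, 0.0), (4.0, 2.0, 1.0))
```

Dividing both coordinates by the longest size keeps the texture aspect ratio undistorted on the shape.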

7. Override alpha channel detection (field alphaChannel for ImageTexture, MovieTexture and other textures)

DEPRECATED: Instead of using this, consider setting Appearance.alphaChannel. Using Appearance.alphaChannel is a straightforward way of influencing how the alpha is treated at rendering a particular Appearance.


Our engine detects the alpha channel type of every texture automatically. There are three possible situations:

- The texture has no alpha channel (it is always opaque), or

- the texture has simple yes-no alpha channel (transparency rendered using alpha testing), or

- the texture has full range alpha channel (transparency rendered by blending, just like partially transparent materials).

The difference between these cases is detected by analyzing alpha channel values.

Developers: see TEncodedImage.AlphaChannel. There is also a special program in engine sources (see the examples/images_videos/image_identify.lpr demo) if you want to test this algorithm yourself. You can also see the results for your textures if you run view3dscene with the --debug-log option.
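The three situations listed above can be illustrated with a deliberately simplified classifier in Python. This is not the TEncodedImage.AlphaChannel implementation (which may, for instance, apply tolerances); it only shows the idea of classifying by the alpha values present. The returned strings reuse the alphaChannel field values, and the function name is hypothetical.

```python
def detect_alpha_channel(alpha_values):
    """Classify 8-bit alpha values as "NONE", "TEST" or "BLENDING" (simplified)."""
    result = "NONE"
    for a in alpha_values:
        if 0 < a < 255:
            # Any partially transparent pixel requires blending.
            return "BLENDING"
        if a == 0:
            # Fully transparent pixels alone only need alpha testing.
            result = "TEST"
    return result


opaque = detect_alpha_channel([255, 255, 255])
cutout = detect_alpha_channel([0, 255, 255, 0])
smooth = detect_alpha_channel([0, 128, 255])
```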

Sometimes you want to override results of this automatic detection. For example, maybe your texture has some pixels using full range alpha but you still want to use simpler rendering by alpha testing (that doesn't require sorting, and works nicely with shadow maps).

If you modify the texture contents at runtime (for example by scripts, like demo_models/castle_script/edit_texture.x3dv in demo models) you should also be aware that alpha channel detection happens only once. It is not repeated later, as this would be 1. slow 2. could cause weird rendering changes. In this case you may also want to force a specific alpha channel treatment, if the initial texture contents are opaque but you want to later modify its alpha channel.

To enable this we add a new field to all texture nodes (everything descending from X3DTextureNode, like ImageTexture, MovieTexture; also Texture2 in VRML 1.0):

X3DSingleTextureNode {

... all normal X3DSingleTextureNode fields ...

SFString [] alphaChannel "AUTO" # "AUTO", "NONE", "TEST" or "BLENDING"

}

Value AUTO means that automatic detection is used. This is the default. Other values force the specific alpha channel treatment and rendering, regardless of initial texture contents.

8. Movies for MovieTexture can be loaded from images sequence

Inside MovieTexture nodes, you can use a URL like my_animation_@counter(1).png to load a movie from a sequence of images. This will load a series of images. We will substitute @counter(<padding>) with successive numbers starting from 0 or 1 (if filename my_animation_0.png exists, we use it; otherwise we start from my_animation_1.png).

The parameter inside the @counter(<padding>) macro specifies the padding. The number will be padded with zeros to have at least the required length. For example, @counter(1).png results in names like 1.png, 2.png, ..., 9.png, 10.png... While @counter(4).png results in names like 0001.png, 0002.png, ..., 0009.png, 0010.png, ...
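The substitution rule can be reproduced in a few lines of Python, which may help when generating or checking file names for an image sequence. This is a sketch of the rule as described above, not the engine's loader; `expand_counter` and `first_index` are names invented for this example, and `exists` stands for whatever file-existence check you use.

```python
import re

# Matches the @counter(<padding>) macro and captures the padding.
COUNTER_RE = re.compile(r"@counter\((\d+)\)")


def expand_counter(url, index):
    """Replace @counter(<padding>) with index, zero-padded to the given length."""
    return COUNTER_RE.sub(lambda m: str(index).zfill(int(m.group(1))), url)


def first_index(url, exists):
    """Start from 0 if the frame numbered 0 exists, otherwise from 1."""
    return 0 if exists(expand_counter(url, 0)) else 1


name_a = expand_counter("my_animation_@counter(1).png", 10)
name_b = expand_counter("my_animation_@counter(4).png", 10)
```

For example, to list the first three frames of a sequence whose numbering starts at 1, call `expand_counter(url, i)` for `i` in `range(1, 4)`.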

A movie loaded from an image sequence will always run at the speed of 25 frames per second. (Developers: if you use a class like TGLVideo2D to play movies, you can customize the TGLVideo2D.FramesPerSecond property.)

A simple image filename (without the @counter(<padding>) macro) is also accepted as a movie URL. This just loads a trivial movie, that consists of one frame and is always still...

Allowed image formats are just like everywhere in our engine — PNG, JPEG and many others, see Castle Image Viewer for the list.

Besides the fact that loading image sequence doesn't require ffmpeg installed, using image sequence has also one very important advantage over any other movie format: you can use images with alpha channel (e.g. in PNG format), and MovieTexture will be rendered with alpha channel appropriately. This is crucial if you want to have a video of smoke or flame in your game, since such textures usually require an alpha channel.

Samples of MovieTexture usage are inside our VRML/X3D demo models, in subdirectory movie_texture/.

9. Texture for GUI (TextureProperties.guiTexture)

TextureProperties {

...

SFBool [] guiTexture FALSE

}

When the guiTexture field is TRUE, the texture is not forced to have power-of-two size, and it never uses mipmaps. Good for GUI stuff, or other textures where forcing power-of-two causes unacceptable loss of quality (and it's better to give up mipmaps).

10. Flip the texture vertically at loading (ImageTexture.flipVertically, MovieTexture.flipVertically)

We add a new field to ImageTexture and MovieTexture to flip them vertically at loading.

ImageTexture or MovieTexture {

...

SFBool [] flipVertically FALSE

}

This parameter is in particular useful when loading a model from the glTF format. Our glTF loading code (that internally converts glTF into X3D nodes) sets this field to TRUE for all textures referenced by the glTF file. This is necessary for correct texture mapping.

Note that the decision whether to flip the texture is specific to this ImageTexture / MovieTexture node. Other X3D texture nodes (even if they refer to the same texture file) do not have to flip the texture. Our caching mechanism intelligently accounts for it, and knows that "foo.png with flipVertically=FALSE has different contents than foo.png with flipVertically=TRUE".

Detailed discussion of why this field is necessary for interoperability with glTF:

glTF 2.0 specification about "Images" says explicitly (and illustrates it with an image) that the texture coordinate (0,0) corresponds to the upper-left image corner.

As far as I know (glTF issue #1021, glTF issue #674, glTF-Sample-Models issue #82) this is compatible at least with glTF 1.0, WebGL and Vulkan. I am not an expert about WebGL, and I was surprised that WebGL does something differently than OpenGL(ES) — but it seems to be the case, after reading the above links.

In contrast, X3D expects that the texture coordinate (0,0) corresponds to the bottom-left image corner. See the X3D specification "18.2.3 Texture coordinates".

This is consistent with OpenGL and OpenGLES, at least if you load the textures correctly, following the OpenGL(ES) requirements: glTexImage2D docs require that image data starts at a lower-left corner.

How to reconcile these differences? Three solutions come to mind:

- Use a different shader for rendering glTF content, that transforms texture coordinates like texCoord.y = 1 - texCoord.y.

  I rejected this idea, because 1. I don't want to complicate shader code by a special clause for glTF, 2. I don't want to perform at runtime (each time you render a pixel!) something that could otherwise be done at loading time.

- At loading, process all texture coordinates in glTF file, performing the texCoord.y = 1 - texCoord.y operation.

  I rejected this idea, because it would make it impossible to load buffers (containing per-vertex data) straight from glTF binary files into GPU. In other words, we would lose one of the big glTF advantages: maximum efficiency in transferring data to GPU.

- At loading, flip the textures vertically. This means that original texture coordinates can be used, and the result is as desired.

  I chose this option. Right now our image loaders just flip the image after loading, but in the future they could load a texture already flipped. This is possible since many image formats (like PNG, BMP) actually store the lines in top-to-bottom order already.

So, this solution makes it possible to have efficient loading of textures, and of glTF.
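The equivalence between flipping the texture and flipping the T coordinate can be checked on a tiny image. The Python sketch below models an image as a list of rows stored top-to-bottom and uses nearest-texel sampling; all names are hypothetical and the model is a simplification made for this illustration, not engine code.

```python
def nearest(rows, s, t):
    """Pick the texel at (s, t), where rows[0] is the row at t = 0."""
    h, w = len(rows), len(rows[0])
    return rows[min(int(t * h), h - 1)][min(int(s * w), w - 1)]


def sample_gltf(rows_top_first, s, t):
    """glTF convention: (0, 0) is the upper-left corner of the image."""
    return nearest(rows_top_first, s, t)


def upload_for_x3d(rows_top_first, flip_vertically):
    """Return rows ordered bottom-first, as X3D/OpenGL texture lookups expect."""
    if flip_vertically:
        # Flipped image: the file's top row ends up at the bottom (t = 0).
        return list(rows_top_first)
    # Unflipped image: the file's bottom row is at t = 0.
    return list(reversed(rows_top_first))


image = [["A", "B"],   # top row of the file
         ["C", "D"]]   # bottom row of the file

wanted = sample_gltf(image, 0.25, 0.25)                           # what glTF means
flipped = nearest(upload_for_x3d(image, True), 0.25, 0.25)        # flip the texture
coords = nearest(upload_for_x3d(image, False), 0.25, 1 - 0.25)    # flip the coordinate
unfixed = nearest(upload_for_x3d(image, False), 0.25, 0.25)       # no correction
```

Both corrections select the texel glTF intended, while the uncorrected lookup picks a texel from the wrong row. Flipping the texture has the advantage that the texture coordinates stay untouched.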

Bonus: This allows us to support KHR_texture_transform without any headaches. Since we flipped the textures, glTF texture coordinates and texture (coordinate) transformations "just work" without any additional conversion. There's no need to e.g. flip offset.y given in KHR_texture_transform, and there's no need to worry how it can interact with negative scale.y in KHR_texture_transform.

Why only for ImageTexture / MovieTexture?

glTF 2.0 assumes only 2D texture images. So we do not bother with a similar solution for 3D texture nodes, or cube-map texture nodes.

Future glTF versions may add more texture types, they may also add some fields to declare the orientation of texture coordinates. Until then, our current solution is directed at handling 2D textures in glTF 2.0 correctly, nothing more is necessary.

11. Multi-texture with only generated children (MultiGeneratedTextureCoordinate)

MultiGeneratedTextureCoordinate : X3DTextureCoordinateNode {

SFNode [in,out] metadata NULL # [X3DMetadataObject]

SFNode [in,out] texCoord NULL # [TextureCoordinateGenerator, ProjectedTextureCoordinate]

}

This node acts just like the standard MultiTextureCoordinate but only generated texture coordinates (not explicit texture coordinates) are allowed.

It is useful to allow in texCoord of geometry nodes that do not expose explicit coordinates, but that allow using generated texture coords:

- Box,

- Cone,

- Cylinder,

- Sphere,

- Extrusion,

- Text,

- Teapot.